Building Flash Multiplayer Games

This tutorial is also available in a pure Flash version.

| Table of contents | |
|---|---|
| 1 - Introduction | 8 - Synchronization |
| 2 - Game Basics | 9 - Interpolation |
| 3 - Turn Based Games | 10 - Latency |
| 4 - Network Architectures | 11 - Tips & Tricks |
| 5 - Security | 12 - Game: Turn Based |
| 6 - Example Game | 13 - Game: Realtime uninterpolated |
| 7 - Real Time Games | 14 - Game: Realtime interpolated |

Synchronization

Synchronization is the act of getting all game instances to match each other as closely as possible. In layman's terms, we are trying to make the same thing show up on every player's screen. For now, we will ignore the fundamental paradoxes caused by latency in order to introduce the tools we use to keep the game in sync.

There are two kinds of synchronization: event synchronization and state synchronization.

Both of these are important to a successful, efficient multiplayer game.

Before we can understand the pros and cons of each, we must first look at the constraints we operate within. The two constraints we must consider are bandwidth and latency.

Recall that we are making a Flash game, something that should be playable at school or work on a sub-par machine over a very poor network. I often see people compare their projects to games such as Halo or Team Fortress 2 and say they are going to make something just as smooth.

But the technology you have available doesn't even compare. Commercial real-time games normally use UDP connections on higher-end machines; we are stuck with TCP on low-end machines.

If TCP or UDP sounds like gibberish to you, fear not: you are in the right place.

TCP and UDP are two different data transfer protocols with different attributes. I am simplifying to a very large degree, but the basic difference is this: TCP assures delivery through acknowledgments (the receiver sends a message back to tell the sender it received each packet), while UDP does not (and is therefore prone to packet loss) but is much faster and has less overhead.

Of course, you don't really have to choose between the two protocols, because the decision has been made for you! Flash only allows the sending of TCP packets, so TCP is what we're stuck with. This creates an interesting setup. Almost no developer, given the choice, would choose TCP over UDP for a real-time game. But we do get guaranteed data delivery (hooray), so even though we lack power, this setup will be kinder to us in some ways: we need not worry about packet loss or other network complications.

Remember the two key players we mentioned earlier? Bandwidth and latency are both adversely affected by TCP.

We've already taken a decent look at latency and the problems associated with it.

Suffice it to say that we'd like latency to be as low as possible. TCP doesn't actually hurt latency much under normal conditions; in fact, we should expect it to perform just as well as UDP in most cases. TCP becomes a liability when something goes wrong. If a TCP packet gets lost in the network, the sender retransmits it; this is how delivery is guaranteed. A second feature of TCP is that it guarantees in-order reception of packets, so every packet that arrives after the lost one is held back until the missing packet is recovered. This holds up the line for all packets and thus increases your latency.

The effect is that your ping will be fairly decent most of the time, but you will experience spikes in latency that can be very dangerous.
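To make the stall concrete, here is a small TypeScript sketch (TypeScript being a close cousin of ActionScript 3) that models only TCP's in-order delivery rule, not a real TCP stack. It assumes packets are numbered 0, 1, 2, ... and that we already know when each one, or its retransmission, arrived.

```typescript
// Minimal model of TCP's in-order delivery rule (not a real TCP stack).
// Times are in milliseconds; sequence numbers are assumed to be 0..n-1.
interface Arrival {
  seq: number;       // order in which the sender sent the packet
  arrivedAt: number; // when the packet (or its retransmission) reached us
}

// Returns, for each sequence number, the time the game actually receives
// the packet. A packet is released only after every earlier packet has been.
function inOrderDeliveryTimes(arrivals: Arrival[]): number[] {
  const bySeq = arrivals.slice().sort((a, b) => a.seq - b.seq);
  const delivered: number[] = [];
  let releaseTime = 0;
  for (const packet of bySeq) {
    releaseTime = Math.max(releaseTime, packet.arrivedAt);
    delivered[packet.seq] = releaseTime;
  }
  return delivered;
}

// Packets sent 10 ms apart with 50 ms of latency; packet 1 is lost and its
// retransmission only arrives at 300 ms.
const times = inOrderDeliveryTimes([
  { seq: 0, arrivedAt: 50 },
  { seq: 1, arrivedAt: 300 },
  { seq: 2, arrivedAt: 70 },
  { seq: 3, arrivedAt: 80 },
]);
// times is [50, 300, 300, 300]: packets 2 and 3 arrived on time but wait for 1.
```

One lost packet delays everything queued behind it, which is exactly the latency spike described above.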

Our second restriction is bandwidth: the amount of information that can be sent through the network per unit of time.

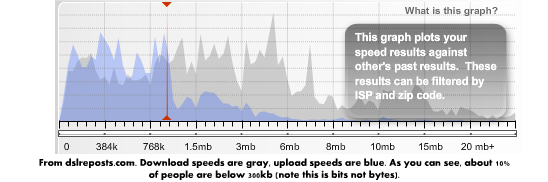

Before we do anything, it would be good to know how much we can transfer, just as a baseline. This statistic is finicky and open to interpretation. Should we say that the maximum bandwidth we use is 56k, because that's the lowest anyone still uses? For almost any game with multiple things happening, that is a very restrictive limit to enforce.

Here is some speed data pulled off the web (August 2009).

For argument's sake, let us say we choose a maximum rate of 300 kb/s in order to accommodate 90% of people.

How do we check that our game stays within this rate? We must start counting bytes. The size of each data type is as follows (before any unknown serialization overhead):

int or uint: 4 bytes

String: (# of chars) + 1 bytes

Number: up to 7 bytes

Boolean: 1 byte
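As a rough calculator, the sizes above can be summed in code. This is a minimal sketch: the `Field` type and the `"move"` message are hypothetical, `Number` is budgeted at its 7-byte worst case, and string length assumes one byte per character (plain ASCII).

```typescript
// Byte sizes from the list above, excluding unknown serialization overhead.
// The Field type is a hypothetical description of one value in a message.
type Field =
  | { kind: "int" }     // int or uint: 4 bytes
  | { kind: "number" }  // Number: up to 7 bytes (we budget for the worst case)
  | { kind: "boolean" } // Boolean: 1 byte
  | { kind: "string"; value: string }; // (# of chars) + 1 bytes, ASCII assumed

function fieldSize(field: Field): number {
  if (field.kind === "string") return field.value.length + 1;
  if (field.kind === "int") return 4;
  if (field.kind === "number") return 7;
  return 1; // boolean
}

// Size of a message's payload: its type string plus every value sent with it.
function payloadSize(messageType: string, fields: Field[]): number {
  let total = fieldSize({ kind: "string", value: messageType });
  for (const field of fields) total += fieldSize(field);
  return total;
}

// A hypothetical "move" message carrying a player id and an x/y position.
const moveBytes = payloadSize("move", [{ kind: "int" }, { kind: "number" }, { kind: "number" }]);
// moveBytes is 23: 5 (type) + 4 (id) + 7 (x) + 7 (y)
```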

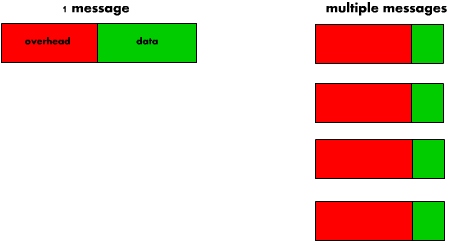

So, we add up the size of our message type and all the data we send with it. Are we done? Not quite. Each message also needs TCP, IP and Ethernet headers so that it can get to where it's going. The TCP header, unfortunately, is much bigger than its UDP counterpart, and the total header consists of at least 84 bytes and can be much larger (generally around 120). This is huge compared to our data!

So, we need to minimize the number of messages sent and maximize the amount of data per message.
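The following sketch puts numbers on that advice. It assumes the 84-byte minimum header from above and a hypothetical game with 8 players, each described by a 23-byte update sent 10 times per second.

```typescript
// Assumption: every message pays the 84-byte minimum header quoted above.
const HEADER_BYTES = 84;

// Bytes per second when sending `messagesPerSecond` messages, each with
// `payloadBytes` of game data on top of the header.
function bytesPerSecond(messagesPerSecond: number, payloadBytes: number): number {
  return messagesPerSecond * (HEADER_BYTES + payloadBytes);
}

// Hypothetical scenario: 8 players, 10 updates per second, 23 bytes each.
const players = 8;
const updatesPerSecond = 10;
const perPlayerBytes = 23;

// One message per player per update: the header is paid 80 times a second.
const separate = bytesPerSecond(players * updatesPerSecond, perPlayerBytes);
// One message per update carrying all 8 players: the header is paid 10 times.
const batched = bytesPerSecond(updatesPerSecond, players * perPlayerBytes);
// separate = 8560 bytes/s, batched = 2680 bytes/s
```

Batching the same data cuts the traffic to under a third here, purely by paying the header less often. Multiply by 8 to compare the result with a rate quoted in bits per second.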

State synchronization

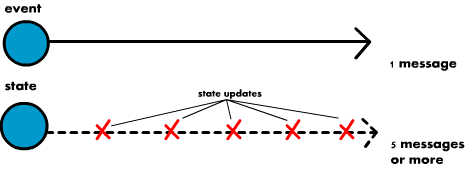

Now that we have a basic understanding of our network limitations, let's look again at our methods of synchronization. State synchronization is where you send an object's current state across the network at fixed intervals (such as every 100 ms).

Essentially, every time you send a message for state synchronization, you send a snapshot of the game world. Of course, we shouldn't send 30 messages a second to keep up with the frame rate of the game. That is too expensive! Plus, we would be needlessly wasting bandwidth and even then (due to variation in latency) we wouldn't get perfect and smooth synchronization.

One of the great things about state synchronization, however, is that we can really chunk up our messages and minimize the large amounts of inefficiency caused by message overhead.

Instead of sending separate messages such as we might do in a turn-based game, we can stuff all the information into one message and thus evade the deadly repeated overhead cost.
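A snapshot can be packed into one message along these lines. This is a hypothetical TypeScript sketch: the flat `[time, id, x, y, ...]` layout is an assumption, standing in for however your networking library lets you append several values to a single message.

```typescript
interface EntityState {
  id: number;
  x: number;
  y: number;
}

// Pack one snapshot of the whole world into a single flat list of values:
// [time, id, x, y, id, x, y, ...]. The layout is an assumption for this
// sketch; the point is that all entities share one message (and one header).
function buildSnapshot(time: number, entities: EntityState[]): number[] {
  const message: number[] = [time];
  for (const e of entities) message.push(e.id, e.x, e.y);
  return message;
}

// Unpack a snapshot built by buildSnapshot.
function readSnapshot(message: number[]): { time: number; entities: EntityState[] } {
  const entities: EntityState[] = [];
  for (let i = 1; i + 2 < message.length; i += 3) {
    entities.push({ id: message[i], x: message[i + 1], y: message[i + 2] });
  }
  return { time: message[0], entities };
}

const snapshot = buildSnapshot(500, [
  { id: 1, x: 10, y: 20 },
  { id: 2, x: 30, y: 40 },
]);
// snapshot is [500, 1, 10, 20, 2, 30, 40]
const decoded = readSnapshot(snapshot);
```

In a game, `buildSnapshot` would run from a fixed-interval timer (for example every 100 ms), not every frame.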

Of course, state synchronization is not without its flaws. If we rely on this method alone, any new information must wait for the next update to be sent. This can add up to a full update interval (about 100 ms in our example) to the time it takes for a player to receive a message.

In a situation where these fractions of a second matter, this can be very detrimental.

Event synchronization

If you are trying to aim at another player who is moving, or someone shoots at you, you want to know this information ASAP. This is why we require event synchronization. We accomplish event synchronization by sending a message immediately every time an event occurs that is important and time-dependent enough to merit its own message and the extra overhead that comes with it.

Of course, depending on the game, this might actually lead to better efficiency; it all depends on how frequently these events occur. If you send a message every time a player presses a key, you can get relatively good synchronization from that alone. However, small inaccuracies build up quickly, so state updates are still needed to fix these innate errors.
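A remote player driven by input events and corrected by state updates might be modelled as below. This is a minimal sketch with hypothetical names; it assumes an input message carries the remote player's new velocity (in pixels per ms) and a state update carries the true x position.

```typescript
// How a remote player might look on your screen when input events drive
// movement and state updates correct it. Velocities are in pixels per ms.
class RemoteEntity {
  x = 0;
  private velocity = 0;

  // Event sync: a key-press message arrives, so start moving right away.
  onInputEvent(velocity: number): void {
    this.velocity = velocity;
  }

  // Advance our local guess by the time elapsed since the last tick.
  update(elapsedMs: number): void {
    this.x += this.velocity * elapsedMs;
  }

  // State sync: overwrite our guess with the authoritative value, which
  // removes any error that has built up. Snapping is the crudest fix;
  // smoothing it out is the job of interpolation.
  onStateUpdate(trueX: number): void {
    this.x = trueX;
  }
}

const remote = new RemoteEntity();
remote.onInputEvent(0.25); // "moving right" message
remote.update(100);        // x is now 25 (our prediction)
remote.onStateUpdate(24);  // the true position is 24: correct the drift
remote.update(100);        // x is now 49
```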

If we keep relatively good synchronization just by sending inputs, it is logical that we can reduce the number of state updates we need. Combining the two techniques can cut state updates by as much as half! If the number of bytes generated by key press messages is less than the amount you save by not sending the (often bulky) state updates, then the combination is a better solution for your game (different games require different solutions).
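The break-even rule above is simple arithmetic. This sketch, with hypothetical numbers, returns the bytes per second saved by adding key-press messages and reducing state updates; a negative result means the combination costs more than it saves.

```typescript
// Positive result: combining events with fewer state updates saves bandwidth.
// All rates are per second; all sizes are bytes including headers.
function netSavingsPerSecond(
  keyMessagesPerSecond: number,
  keyMessageBytes: number,
  stateUpdatesBefore: number,
  stateUpdatesAfter: number,
  stateUpdateBytes: number,
): number {
  const added = keyMessagesPerSecond * keyMessageBytes;
  const saved = (stateUpdatesBefore - stateUpdatesAfter) * stateUpdateBytes;
  return saved - added;
}

// Hypothetical numbers: 4 key messages/s of 90 bytes each let us halve
// 268-byte state updates from 10/s to 5/s.
const savings = netSavingsPerSecond(4, 90, 10, 5, 268);
// savings = 1340 - 360 = 980 bytes per second
```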

In fact, under closer scrutiny, state updates are very much a brute-force way of doing things while event updates can be much more elegant. For example, take a look at the following comparison. We only send a single message to signify movement to the right, compared to the 5 messages to simulate it state-wise.

In the worst case, event synchronization can be dangerous. If a user presses keys rapidly enough, he could inadvertently flood the network (or do it on purpose as a network attack, which is even worse if he has a macro).

To combat this, you can put a hard cap on the number of event messages by sending events in bursts. This solves the flooding issue above in exchange for a slight added delay. To accomplish this, we set up a very fast timer (around 25 ms) and send all the events that occurred during that period in one single message (if there are no events, we don't send a message). Sending them together also reduces the added overhead.

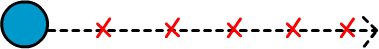
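The burst approach can be sketched as a small queue that a fast timer drains. This is an illustration under assumptions: `send` is a hypothetical stand-in for your networking layer, and wiring `flush()` to a roughly 25 ms timer is left to the game.

```typescript
// Events are queued as they happen; a fast timer (about 25 ms) calls flush().
// At most one message goes out per tick, and none when nothing happened.
// `send` stands in for your networking layer's send call (hypothetical).
class EventBatcher<E> {
  private pending: E[] = [];
  private readonly send: (events: E[]) => void;

  constructor(send: (events: E[]) => void) {
    this.send = send;
  }

  // Call whenever a time-critical event happens, e.g. a key press.
  record(event: E): void {
    this.pending.push(event);
  }

  // Call from the ~25 ms timer. Caps traffic at one message per tick.
  flush(): void {
    if (this.pending.length === 0) return; // no events, no message
    const batch = this.pending;
    this.pending = [];
    this.send(batch);
  }
}

const sent: string[][] = [];
const batcher = new EventBatcher<string>((events) => {
  sent.push(events);
});
batcher.record("left");
batcher.record("left");
batcher.record("fire"); // a macro spamming keys still produces one message
batcher.flush();        // sends ["left", "left", "fire"] as one message
batcher.flush();        // nothing queued, nothing sent
```

However fast keys are pressed, the message rate can never exceed one per timer tick (40 per second at 25 ms).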

Now that we've looked at both methods of synchronization, we can see what it looks like when we combine the two. We get an entity that reacts to input and then periodically updates its position with the true value. A dramatization (the errors in position are exaggerated for demonstration's sake) looks like this:

Time stamps

There is yet another very damaging factor that we must address: you are NOT guaranteed that all instances of the game will run at the same speed. For example, different players might get different frame rates, which will throw you off if you're using enterFrame.

Well then, why not just use timers instead? Timers are unreliable as well. The time between timer ticks is nowhere near constant and the timer does not attempt to make up lost time.

So, what do we do? We cannot have players seeing objects moving on their screens at different rates from each other or (even worse) at a different rate from the server. It's a correctional nightmare! Every state update would result in players being dragged into proper position.

So, we must have some reliable way of making all clients go at the same speed.

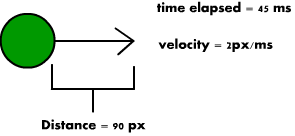

What we want is to make all actions depend on real time. In other words, we want to base our actions on the number of ms passed since our last update.

Flash has two ways of computing this: getTimer() and Date.getTime(). getTimer() returns the number of ms since the game began, while Date.getTime() returns an actual time (taken from your system clock) in ms. I would suggest using Date.getTime(), because getTimer() can become inaccurate over long periods of time.

But how do we use this? Quite simply, record the sampled time at each game tick; then, on the next tick, time elapsed = (current time) - (old time). Multiplying this by your velocity (in units per ms) gives you the distance travelled. Beware, however, that system clocks can be slightly inaccurate and can differ from each other, so this is only a very good approximation (the best we've got).
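In code, the rule above looks roughly like this minimal TypeScript sketch. The current time is passed in so the example is testable; in a real game it would come from the system clock (`Date.now()` in TypeScript, the counterpart of `new Date().getTime()` in ActionScript).

```typescript
// Movement driven by elapsed real time instead of frames or timer ticks.
class TimeBasedMover {
  x = 0;
  private lastTime: number;
  private readonly velocityPerMs: number;

  constructor(velocityPerMs: number, startTime: number) {
    this.velocityPerMs = velocityPerMs;
    this.lastTime = startTime;
  }

  // Call once per game tick, however irregular the ticks are.
  // `now` would normally be the system clock, e.g. Date.now().
  tick(now: number): void {
    const elapsed = now - this.lastTime; // elapsed = current time - old time
    this.lastTime = now;
    this.x += this.velocityPerMs * elapsed;
  }
}

// Two clients with very different tick patterns, both moving at 0.5 px/ms.
const jittery = new TimeBasedMover(0.5, 0);
const steady = new TimeBasedMover(0.5, 0);
[33, 70, 1000].forEach((t) => jittery.tick(t));
[250, 500, 750, 1000].forEach((t) => steady.tick(t));
// After 1000 ms both have moved 500 px.
```

Even though one client ticks three times at irregular intervals and the other four times evenly, both end up at the same position.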

In addition, we may want to stamp our messages with the time. Fluctuations in latency make it difficult (if not impossible) to tell how long ago a message was actually sent unless you stamp it. If we get a message earlier or later than expected, it could seriously throw off what we see on the screen.

So, we must think about the timing of our messages and how to make it clear when they were sent. If we do this, we can correct for an early or late message either by using a simple linear extrapolation or by recording data from the past and queueing events to happen in the future (the latter being more difficult and computationally expensive, but more reliable).
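Simple linear extrapolation from a timestamped message can be sketched as follows. It assumes that sender and receiver clocks roughly agree (which, as mentioned above, is only approximately true) and that each message carries a position and a velocity; the field names are hypothetical.

```typescript
interface StampedState {
  sentAt: number;   // sender's clock when the state was captured (ms)
  x: number;        // position at sentAt
  velocity: number; // units per ms at sentAt
}

// Simple linear extrapolation: assume the entity kept its velocity since the
// message was stamped, and project it forward to `now`.
function extrapolateX(state: StampedState, now: number): number {
  // Design choice: clamp at zero. With mismatched clocks a message can appear
  // to come from the future, and we choose not to move the entity backwards.
  const age = Math.max(0, now - state.sentAt);
  return state.x + state.velocity * age;
}

const msg: StampedState = { sentAt: 1000, x: 100, velocity: 0.25 };
const lateX = extrapolateX(msg, 1080);  // 120: the message was 80 ms old
const freshX = extrapolateX(msg, 1000); // 100: the message is brand new
```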

Of course, if a message is late, the damage has already been done. We would have had to predict what would happen until that message arrived. If the information is not what we expected, serious error has built up on our screen. Knowing how far off we are (thanks to the timestamp) doesn't change the fact that we will need to make a large correction.

A solution to this dilemma is proposed in the tips and tricks section.

Regardless of timestamp correction, the problem remains that the player jumps around every time he receives a state update. In the next chapters we will discuss interpolation (smoothing in between two known points) and extrapolation (prediction based on what you already know).

First, however, here is a sample implementation of event and state synchronization (and simplistic timestamps) using the PlayerIO API.


About the author

Ryan Brady is a Canadian developer who is currently attending the University of Waterloo. When he isn't fooling around with friends or dealing with an ungodly amount of course work, he likes to design and build games.

Two of the things Ryan likes most above all else are sarcasm and irony. Thus, he likes other people when they have a sense of humor and don't take offence at playful stabs and teasing. Otherwise, people tend to think Ryan hates them and/or the entire universe.

Subscribing to the "Work hard, Play hard" mindset, Ryan is always on the move. There are not enough hours in the day to do everything he wants to do (which is quite a bit). As a habit, Ryan designs more things than he has time to make. Alphas and proofs of concept are his bread and butter, though he always wishes he could make them into full games.

Every so often, you will see Ryan with a full beard on his chin. This is commonly referred to as Schrödinger's beard, as you never know if Ryan will have it when you see him next. He regrows it in under a week.

Every 4 months, Ryan has a co-op term so he moves around quite a bit. He might be living in Canada one month then be living in San Fran the next. You will never know when Ryan will be at large in your area next (until it is too late).